Archive for the ‘semantic web’ Category

Making OpenCharities even better… more features, more data, more charities

I had a fantastic response to the launch of OpenCharities — my little side project to open up the Charity Commission’s Register of Charities — from individuals, from organisations representing the third sector, and from charities themselves.

There were also a few questions:

- Could we pull out and expose via the API more information about the charities, especially the financial history?

- How often would OpenCharities be updated and what about new charities added after we’d scraped the register?

- Was there any possibility that we could add additional information from sources other than the Charity Register?

So, over the past week or so, we’ve been busy trying to answer those questions as best we could, mainly by simply getting on and solving them.

First, additional info. After a terrifically illuminating meeting with Karl and David from NCVO, I had a much better idea of how the charity sector is structured, and what sort of information would be useful to people.

So the first thing I did was to rewrite the scraper and parser to pull in a lot more information, particularly the past five years’ income and spending and, for bigger charities, the breakdown of that income and spending. (I also pulled in the remaining charities that had been missed the first time around, including removed charities.) Here’s what the NSPCC’s entry, for example, looks like now:

We are also now getting the list of trustees, and links to the accounts and Summary Information Returns, as there’s all sorts of goodness locked up in those PDFs.

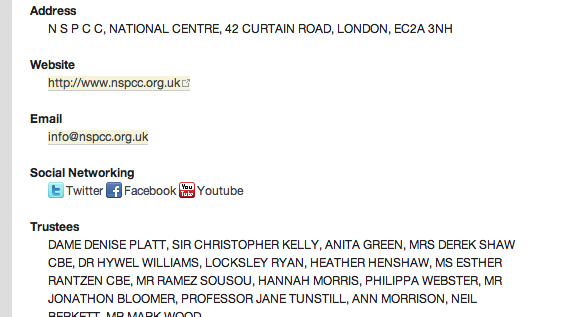

However, while we were running through all these charities, we wondered if any of them had social networking info easily available (i.e. on their front page). It turns out some of the bigger ones did, so we visited their sites and pulled out that info (it’s fairly easy to look for links to Twitter/Facebook/YouTube etc. on a home page). Here’s an example of the social networking info, again for the NSPCC.

[Incidentally, doing this threw up some errors in the Charity Register, most commonly websites that are listed as http://http://some.charity.org.uk, which in itself shows the benefit of opening up the data. All we need now is a way of communicating that to the Charity Commission.]
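The link-spotting described above can be sketched with Python’s standard library. This is a minimal illustration, not the scraper OpenCharities actually uses: it assumes the home page HTML has already been fetched, `SOCIAL_HOSTS` is a hypothetical starting list of hosts, and `fix_doubled_scheme` shows one way of repairing register entries like `http://http://some.charity.org.uk` before visiting them.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse

# Hypothetical lookup of host names to social networks; extend as needed.
SOCIAL_HOSTS = {
    "twitter.com": "twitter",
    "facebook.com": "facebook",
    "youtube.com": "youtube",
}


def fix_doubled_scheme(url: str) -> str:
    """Repair register entries such as 'http://http://some.charity.org.uk'."""
    url = url.strip()
    schemes = ("http://", "https://")
    while True:
        lowered = url.lower()
        for scheme in schemes:
            # Drop the outer scheme only if another scheme follows it directly.
            if lowered.startswith(scheme) and lowered[len(scheme):].startswith(schemes):
                url = url[len(scheme):]
                break
        else:
            return url


class SocialLinkParser(HTMLParser):
    """Collects the first link found to each known social networking host."""

    def __init__(self):
        super().__init__()
        self.found = {}

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href") or ""
        host = urlparse(href).netloc.lower()
        if host.startswith("www."):
            host = host[len("www."):]
        network = SOCIAL_HOSTS.get(host)
        if network and network not in self.found:
            self.found[network] = href


def social_links(html: str) -> dict:
    """Return {network: url} for social links found in a page's HTML."""
    parser = SocialLinkParser()
    parser.feed(html)
    parser.close()
    return parser.found
```

Matching on the link’s host rather than searching the raw text avoids false hits such as a page that merely mentions the word “twitter”; subdomains like `uk.youtube.com` would need extra entries.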

We also (after way too many hours wasted messing around with cookies and hidden form fields) figured out how to get the list of charities recently added, with the result that we can check every night for new charities added in the past 24 hours, and add those to the database.

This means not only can we keep OpenCharities up to date, but we can also offer an RSS feed of the latest charities. And if that’s updated a bit too frequently for you (some days over 20 charities are added), you can always restrict it to a given search term, e.g. http://OpenCharities/charities.rss?term=children for those charities with children in the title.
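The selection behind a feed like this, charities added in the past 24 hours and optionally narrowed to a title term, can be sketched as below. The `Charity` record and its field names are hypothetical, and rendering the RSS XML itself is left out; this is an illustration under those assumptions, not the site’s code.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Charity:
    """Hypothetical record for a newly registered charity."""
    number: str
    title: str
    registered_at: datetime


def recently_added(charities, now, term=None, window=timedelta(hours=24)):
    """Charities registered in the `window` before `now`, newest first.

    If `term` is given, keep only those whose title contains it
    (case-insensitive), mirroring a feed restricted to a search term.
    """
    cutoff = now - window
    recent = [c for c in charities if cutoff < c.registered_at <= now]
    if term:
        needle = term.lower()
        recent = [c for c in recent if needle in c.title.lower()]
    return sorted(recent, key=lambda c: c.registered_at, reverse=True)
```

Running this once a night with `now` set to the time of the check gives exactly the “past 24 hours” batch described above.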

Finally, we’ve been looking at what other datasets we could link with the register, and I thought a good one might be the list of grants given out by the various National Lottery funding bodies (which fortunately had already been scraped by the very talented Julian Todd using ScraperWiki).

Then it was a fairly simple matter of tying the recipients together with the register, and voilà, you have something like this:

Note, at the time of writing, the import and match of the data is still going on, but should be finished by the end of today.
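One simple way to tie grant recipients to register entries is to normalise both names and look for an unambiguous exact match. The sketch below is an assumption about how such matching might work, not the method actually used for the Lottery data: the register is taken to be `(number, name)` pairs, and the normalisation rules are illustrative.

```python
import re
from collections import defaultdict
from typing import Optional


def normalise_name(name: str) -> str:
    """Crude normalisation so trivially different spellings compare equal."""
    name = re.sub(r"['’]", "", name.lower())   # "Children's" -> "childrens"
    name = name.replace("&", " and ")
    name = re.sub(r"[^a-z0-9]+", " ", name)     # other punctuation -> spaces
    words = name.split()
    if words and words[0] == "the":
        words = words[1:]
    return " ".join(words)


def build_index(register):
    """Map normalised name -> set of charity numbers from (number, name) pairs."""
    index = defaultdict(set)
    for number, name in register:
        index[normalise_name(name)].add(number)
    return index


def match_recipient(recipient: str, index) -> Optional[str]:
    """Return a charity number only when the normalised name is unambiguous."""
    numbers = index.get(normalise_name(recipient), set())
    return next(iter(numbers)) if len(numbers) == 1 else None
```

Refusing to match when two charities share a normalised name keeps false positives down; those cases, and near-misses, would still need fuzzier matching or a manual check.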

We’ll also add some simple functionality to show payments from local councils that are being published in the local council spending data. The information’s already in the database (and is actually shown on the OpenlyLocal page for the charity); I just haven’t got around to displaying it on OpenCharities yet. Expect that to appear in the next day or so.

C

p.s. Big thanks to @ldodds and @pigsonthewing for helping with the RDF and microformats respectively

Introducing OpenCharities: Opening up the Charities Register

A couple of weeks ago I needed a list of all the charities in the UK and their registration numbers so that I could try to match them up to the local council spending data OpenlyLocal is aggregating and trying to make sense of. A fairly simple request, you’d think, especially in this new world of transparency and open data, and for a dataset that’s uncontentious.

Well, you’d be wrong. There’s nothing at data.gov.uk, nothing at CKAN and nothing on the Charity Commission website, and in fact you can’t even see the whole register on the website, just the first 500 results of any search/category. Here’s what the Charity Commission says on its website (NB: extract below is truncated):

The Commission can provide an electronic copy in discharge of its duty to provide a legible copy of publicly available information if the person requesting the copy is happy to receive it in that form. There is no obligation on the Commission to provide a copy in this form…

The Commission will not provide an electronic copy of any material subject to Crown copyright or to Crown database right unless it is satisfied… that the Requestor intends to re-use the information in an appropriate manner.

Hmmm. Time for Twitter to come to the rescue to check that some other independently minded person hasn’t already solved the problem. Nothing, but I did get pointed to this request for the data to be unlocked, with the very recent response by the Charity Commission, essentially saying, “Nope, we ain’t going to release it”:

For resource reasons we are not able to display the entire Register of Charities. Searches are therefore limited to 500 results… We cannot allow full access to all the data, held on the register, as there are limitations on the use of data extracted from the Register… However, we are happy to consider granting access to our records on receipt of a written request to the Departmental Record Officer

OK, so it seems as though they have no intention of making this data available anytime soon (I actually don’t buy that there are Intellectual Property or Data Privacy issues with making basic information about charities available, and if there really are this needs to be changed, pronto), so time for some screen-scraping. Turns out it’s a pretty difficult website to scrape, because it requires both cookies and javascript to work properly.

Try turning off both in your browser and see how far you get; you’ll also get an idea of how difficult it is to use if you have accessibility issues – and check out their poor excuse for an accessibility statement, i.e. tough luck.

Still, there’s usually a way, even if it does mean some pretty tortuous routes, and like the similarly inaccessible Birmingham City Council website, this is just the sort of challenge that stubborn so-and-so’s like me won’t give up on.

And the way to get the info seems to be through the geographical search (other routes relied upon Javascript), and although it was still problematic, it was doable. So, now we have an open data register of charities, incorporated into OpenlyLocal, and tied in to the spending data being published by councils.

And because this sort of thing is so easy, once you’ve got it in a database (Charity Commission take note), there are a couple of bonuses.

First, it was relatively easy to knock up a quick and very simple Sinatra application, OpenCharities:

If there’s any interest, I’ll add more features to it, but for now it’s just the simplest of things: a web application with a unique URL for every charity based on its charity number, with the basic information for each charity available as data (XML, JSON and RDF). It’s also searchable, and sortable by most recent income and spending, and for linked data people there are dereferenceable Resource URIs.
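The “one URL per charity number, available as data” idea can be sketched as follows. `BASE_URI` is a hypothetical placeholder rather than the real OpenCharities URI scheme, and the RDF vocabulary (`rdfs:label`, `dcterms:identifier`) is an assumption chosen for illustration; serving the right format from the same URI (via content negotiation or a file extension) is not shown.

```python
import json

# Hypothetical base URI, for illustration only; not the real OpenCharities scheme.
BASE_URI = "http://example.org/charities/"


def resource_uri(charity_number: str) -> str:
    """One stable URI per charity, keyed on its registered charity number."""
    return BASE_URI + charity_number


def to_json(charity: dict) -> str:
    """JSON view of a charity record, including its resource URI."""
    return json.dumps({"uri": resource_uri(charity["number"]), **charity}, sort_keys=True)


def turtle_literal(text: str) -> str:
    """Quote a string as a Turtle literal, escaping backslashes, quotes and newlines."""
    escaped = text.replace("\\", "\\\\").replace('"', '\\"').replace("\n", "\\n")
    return '"' + escaped + '"'


def to_turtle(charity: dict) -> str:
    """Minimal Turtle; the choice of rdfs:label and dcterms:identifier is an assumption."""
    return (
        "@prefix rdfs: <http://www.w3.org/2000/01/rdf-schema#> .\n"
        "@prefix dcterms: <http://purl.org/dc/terms/> .\n\n"
        f"<{resource_uri(charity['number'])}>\n"
        f"    rdfs:label {turtle_literal(charity['title'])} ;\n"
        f"    dcterms:identifier {turtle_literal(charity['number'])} .\n"
    )
```

Because the URI is derived only from the charity number, every representation of the same charity points back at the same identifier.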

This is very much an alpha application: the design is very basic and it’s possible that a few charities are missing, for two reasons. One: the Charity Commission website kept timing out (I think I managed to pick up all of those, and any I missed should get picked up when I periodically run the scraper); and two: there appears to be a bug in the Charity Commission website, so that when there are between 10 and 13 entries, only 10 are shown, and there is no way of seeing the additional ones. As a benchmark, there are currently 150,422 charities in the OpenCharities database.

It’s also worth mentioning that due to inconsistencies with the page structure, the income/spending data for some of the biggest charities is not yet in the system. I’ve worked out a fix, and the entries will be gradually updated, but only as they are re-scraped.

The second bonus is that the entire database is available to download and reuse (under an open, share-alike attribution licence). It’s a compressed CSV file, weighing in at just under 20MB compressed, and should probably only be attempted by those familiar with manipulating large datasets (don’t try opening it up in your spreadsheet, for example). I’m also in the process of importing it into Google Fusion Tables (it’s still churning away in the background) and will post a link when it’s done.
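For those who do want to work with a file like this, one approach is to stream it row by row rather than loading it all at once. This sketch assumes the download is gzip-compressed and UTF-8 encoded, and the column name used in the example is hypothetical; check the actual file’s compression and header row first.

```python
import csv
import gzip


def iter_rows(path):
    """Stream rows from a gzip-compressed CSV one at a time, as dicts."""
    with gzip.open(path, mode="rt", encoding="utf-8", newline="") as handle:
        yield from csv.DictReader(handle)


def total_of_column(path, column):
    """Sum a numeric column, skipping blank or non-numeric cells."""
    total = 0.0
    for row in iter_rows(path):
        try:
            total += float(row.get(column) or "")
        except ValueError:
            continue
    return total
```

For example, `total_of_column("charities.csv.gz", "latest_income")` (both names hypothetical) would sum a column while holding only one row in memory at a time.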

Now, back to that spending data.

Local spending data in OpenlyLocal, and some thoughts on standards

A couple of weeks ago Will Perrin and I, along with some feedback from the Local Public Data Panel on which we sit, came up with some guidelines for publishing local spending data. They were a first draft, based on a request by Camden council for some guidance, in light of the announcement that councils will have to start publishing details of spending over £500.

Now I’ve got strong opinions about standards: they should be developed from real world problems, by the people using them and should make life easier, not more difficult. It slightly concerned me that in this case I wasn’t actually using any of the spending data – mainly because I hadn’t got around to adding it in to OpenlyLocal yet.

This week I remedied this, and pulled in the data from those authorities that had published their local spending data – Windsor & Maidenhead, the GLA and the London Borough of Richmond upon Thames. Now there are a couple of sites (including Adrian Short’s Armchair Auditor, which focuses on spending categories) already pulling the Windsor & Maidenhead data, but as far as I’m aware they don’t include the other two authorities, and this adds a different dimension to things, as you want to be able to compare the suppliers across authorities.

First, a few pages from OpenlyLocal showing how I’ve approached it (bear in mind they’re a very rough first draft, and I’m concentrating on the data rather than the presentation). You can see the biggest suppliers to a council right there on the council’s main page (e.g. Windsor & Maidenhead, GLA, Richmond):

Clicking through to more info gets you a paginated view of all suppliers (in Windsor & Maidenhead’s case there are over 2800 so far):

Clicking any of these will give you the details for that supplier, including all the payments to them:

And clicking on the amount will give you a page with just the transaction details, so it can be emailed to others.

But we’re getting ahead of ourselves. The first job is to import the data from the CSV files into a database, and this was where the first problems occurred: not in the CSV format, which is not a problem, but in the consistency of the data.

Take Windsor & Maidenhead (you should just be able to open these files in any spreadsheet program). Look at each data set in turn and you find that there’s very little consistency – the earliest sets don’t have any dates and aggregate across a whole quarter (but do helpfully have the internal Supplier ID as well as the supplier name). Later sets have the transaction date (although one uses the US date format, which could catch out those not looking at them manually), but omit supplier ID and cost centre.
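A US-format file can look perfectly valid row by row, so one defensive approach is to check a whole file’s dates before parsing any of them. This sketch assumes slash-separated dates with four-digit years; the function names are hypothetical, and an “ambiguous” result still needs a human to decide.

```python
from datetime import date, datetime


def guess_date_order(samples):
    """Guess whether 'a/b/yyyy' dates are UK (day first) or US (month first).

    Returns 'uk', 'us' or 'ambiguous'; only a field greater than 12 settles it.
    """
    first_over_12 = second_over_12 = False
    for raw in samples:
        first, second, _year = (int(part) for part in raw.strip().split("/"))
        first_over_12 = first_over_12 or first > 12
        second_over_12 = second_over_12 or second > 12
    if second_over_12 and not first_over_12:
        return "us"
    if first_over_12 and not second_over_12:
        return "uk"
    return "ambiguous"


def parse_transaction_date(raw: str, order: str = "uk") -> date:
    """Parse a slash-separated date in the given order ('uk' or 'us')."""
    fmt = "%m/%d/%Y" if order == "us" else "%d/%m/%Y"
    return datetime.strptime(raw.strip(), fmt).date()
```

Deciding the order once per file, rather than per row, avoids silently reading 05/01/2010 as 5 January in one file and 1 May in another.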

On the GLA figures, there’s a similar story, with the type of data and the names used to describe it changing seemingly randomly between data sets. Some of the 2009 ones do have transaction dates, but the 2010 ones generally don’t, and the supplier field has different names, from Supplier to Supplier Name to Vendor.
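Varying column names like these can be handled at import time by mapping every header variant onto one canonical name. The alias table below covers only the supplier variants mentioned above and is a hypothetical starting point, not an agreed schema.

```python
import csv
import io

# Hypothetical aliases covering the supplier column variants seen in the GLA files.
HEADER_ALIASES = {
    "supplier": "supplier_name",
    "supplier name": "supplier_name",
    "vendor": "supplier_name",
}


def canonical_header(header: str) -> str:
    """Lower-case, collapse whitespace, then apply any known alias."""
    key = " ".join(header.strip().lower().split())
    return HEADER_ALIASES.get(key, key.replace(" ", "_"))


def read_spending(text: str):
    """Parse CSV text into dicts keyed by canonical column names."""
    reader = csv.reader(io.StringIO(text))
    headers = [canonical_header(h) for h in next(reader, [])]
    return [dict(zip(headers, row)) for row in reader]
```

Everything downstream then only ever sees `supplier_name`, whichever label a particular file happened to use.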

This is not to criticise those bodies – it’s difficult to produce consistent data if you’re making the rules up as you go along (and given there weren’t any established precedents that’s what they were doing), and doing much of it by hand. Also, they are doing it first and helping us understand where the problems lie (and where they don’t). In short, they are failing forward – getting on with it so they can make mistakes from which they (and, crucially, others) can learn.

But who are these suppliers?

The bigger problem, as I’ve said before, is being able to identify the suppliers, and this becomes particularly acute when you want to compare across bodies (who may name the same company or body slightly differently). Ideally (as we put in the first draft of the proposals), we would have the company number (when we’re talking about a company, at any rate), but we recognised that many accounts systems simply won’t have this information, and so we do need some information that helps us identify them.

Why do we want to know this information? For the same reason we want any ID (you might as well ask why Companies House issues Company Numbers and requires all companies to put that number on their correspondence) – to positively identify something without messing around with how someone has decided to write the name.

With the help of the excellent Companies Open House I’ve had a go at matching the names to company numbers, but it’s only been partially successful. When it works, you can do things like this (showing spend with other councils on a supplier’s page):

It’s also going to allow me to pull in other information about the company, from Companies House and elsewhere. For other bodies (i.e. those without a company number), we’re going to have to find another way of identifying them, and that’s next on the list to tackle.
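Once payments carry a company number, the cross-council view becomes a simple grouping, however differently each council spelled the supplier’s name. This is a minimal sketch assuming payment records are dicts with hypothetical `council`, `company_number` and `amount` keys; amounts are kept as strings and summed with `Decimal` to avoid floating-point rounding on money.

```python
from collections import defaultdict
from decimal import Decimal


def spend_by_council(payments, company_number):
    """Total payments to one company, grouped by paying council.

    Payments without a matched company number are ignored, which is
    exactly why the matching step matters.
    """
    totals = defaultdict(Decimal)
    for payment in payments:
        if payment.get("company_number") == company_number:
            totals[payment["council"]] += Decimal(payment["amount"])
    return dict(totals)
```

With names such as “ACME LTD”, “Acme Limited” and “Acme Ltd.” all carrying the same (made-up) company number, their payments are combined; matching on the name strings alone would keep them apart.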

Thoughts on those spending data guidelines

In general I still think they’re fairly good, and most of the shortcomings have been identified in the comments, or emailed to us (we didn’t explicitly state that the data should be available under an open licence such as the one at data.gov.uk, and we definitely should have done). However, adding this data to OpenlyLocal (as well as providing a useful database for the community) has crystallised some thoughts:

- Identification of the bodies is essential, and I think we were right to make this a key point, but it’s likely we will need the government to provide a lookup table between VAT numbers and Company Numbers.

- Speaking of Government datasets, there’s no way of finding out the ancestry of a company – what its parent company is, what its subsidiaries are – and that’s essential if we’re to properly make use of this information, and similar information released by the government. Companies House bizarrely doesn’t hold this information, but the Office for National Statistics does, and it’s called the Inter Departmental Business Register. Although this contains a lot of information provided in confidence for statistical reasons, the relationships between companies aren’t confidential (they just aren’t stored in one place), so it would be perfectly feasible to release this information.

- We should probably be explicit about whether the figures should include VAT (I think the Windsor & Maidenhead ones don’t include it, but the GLA imply that theirs might).

- Categorisation is going to be a tricky one to solve, as can be seen from the raw data for Windsor & Maidenhead – for example, the Children’s Services Directorate is written as both Childrens Services & Children’s Services, and it’s not clear how this, or the subcategories, ties into standard classifications for government spending, making comparison across authorities tricky.

- I wonder what would be the downside to publishing the description details, even, potentially, the invoice itself. It’s probably FOI-able, after all.
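Two of the points above can be made concrete with a short sketch: a comparison key that collapses spelling variants of the same category, and the arithmetic for stripping VAT from a VAT-inclusive figure. Both functions are hypothetical helpers; mapping categories onto standard government classifications is a separate, harder problem not attempted here, and the VAT rate must be supplied by the caller.

```python
import re
from decimal import ROUND_HALF_UP, Decimal


def category_key(label: str) -> str:
    """Comparison key that treats 'Childrens Services' and 'Children’s Services' as equal."""
    label = re.sub(r"['’]", "", label.lower())
    label = label.replace("&", " and ")
    return " ".join(label.split())


def net_of_vat(gross: Decimal, rate: Decimal) -> Decimal:
    """Remove VAT from a VAT-inclusive amount, rounded to the penny.

    Only valid if the publisher has said the figure includes VAT; `rate`
    is supplied by the caller, e.g. Decimal("0.175") for 17.5%.
    """
    return (gross / (1 + rate)).quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)
```

At a 17.5% rate, a VAT-inclusive £117.50 is £100.00 net, so two councils reporting the same purchase on different bases would differ by that margin.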

As ever, comments welcome, and of course all the data is available through the API under an open licence.

C

Open data meme suggestion: Enabler or blocker?

Earlier today I gave a presentation at the Open Knowledge Conference on open local data, OpenlyLocal and the Open Election Data project. It was a slight update of the talk I gave to the Manchester Social Media Cafe earlier in the month, and one of the key additions was a simple idea I added on the final page, which was about where we should go from here.

I’d been using the idea in conversation for the past few months (and I’m sure I didn’t invent it), but it seemed to resonate with the audience, so I thought it was worth repeating as a short blog post. It’s this:

When dealing with government, with organizations, with public officials, with outsourcing companies we need to develop the meme:

Are you an enabler or a blocker?

It’s a blunt and somewhat unsophisticated weapon, but in the past few months of doing the Open Election Data project, it seems to have been far more effective than any other I’ve tried: better than appealing to the public good, better than engaging on an intellectual level, better than asking for it nicely, better even than talking about potential savings.

Maybe it’s because, as someone suggested to me after the first meeting of the UK government’s Local Public Data Panel on which I sit, civil servants and other public officials only do things because there’s a benefit to them (or a downside if they don’t). [I’m not sure they’re any different than most people working in the private sector in this respect, by the way.] I don’t know, and I don’t really care. What I do care about is getting things done, and this seems to be working for me.

So, I offer it up, not as an original idea (I’m sure it isn’t), but as a suggestion both for engaging with public bodies and for dealing with problems.

When you come across people or organisations, give them the option: do you want to be an enabler or a blocker? If you’re an enabler, great, let’s see how we can make this work; if you’re a blocker, that’s fine too – now we know we’ll just go around you and get on with it anyway.

The Audit Commission, open data and the quest for relevance

[Note: I’m writing this post in a personal capacity, not on behalf of the Local Public Data Panel, on which I sit]

Alternative heading: Audit Commission under threat tries to divert government open data agenda. Fails to get it.

A week or so ago saw the release of a report from the Audit Commission, The Truth Is Out There, which “looks at how the public sector can improve information made available to the public”.

The timing of this is interesting, coming shortly before the Government’s landmark announcement about opening up data and using it to restructure government, and after a series of Conservative-leaning events or announcements making a similar case, albeit framed slightly differently.

Given all this, I’m guessing the Audit Commission is a tough place to be right now. Local Authorities have long complained about the burden it puts on them, the Conservatives have made it plain they see it as a problem rather than a solution so far as efficiency goes, and even the government is scaling back its desire to have targets for everything.

So, given this, perhaps this paper would mark a realisation by the commission that if it doesn’t change its perspective it will become at best irrelevant and at worst a roadblock to open data, increased transparency, efficiency and genuine change.

First it’s worth pointing out some background:

- The Audit Commission has not been active in the open public data movement and in fact has registered zero datasets at data.gov.uk (a search of the site reveals one dataset mentioning them but that turns out to have been published by the Department of Communities & Local Govt).

- Rather than open up its data it has preferred to spend money on portals such as oneplace that have a heavy overlap with other government ones.

- Its website is a mess, almost impossible to navigate, and with some key data only available as inaccessible PDFs.

- The data is very difficult to combine with other data – the Commission don’t use the same identifiers for local authorities as other public bodies and there’s no clear way to combine their IDs with other ones (e.g. ONS SNAC ids).

In short, it’s a typical government body — all focused on process rather than delivery. And its response to the changing landscape of open data, the move from a web of documents to a web of data, and the potential to engage with data directly rather than through the medium of dry official reports?

Actually it’s what you’d expect: there’s a fair bit of social-media blah-blah-blah — Facebook, US open data initiatives, MySociety/FixMyStreet, etc; there’s a bit about transparency that doesn’t actually say much; and then there’s a lot of justification for why there needs to be an Audit-Commission type body which manages to both include jargon (RQP) and avoid talking about the real problems preventing this.

What are these?

- Structural problems – although the net financial benefit to government as a whole will be significant, this will be achieved by stripping out existing wasteful processes, duplication, and intermediary organizations. The idea that a local authority should supply the same dataset to three different bodies in three different formats and three different ways is ludicrous, particularly when those bodies then spend even more time reworking the data so it can be matched up with other datasets.

This is just unnecessary gunk that’s gumming up the works, and the truth is the Audit Commission is one of those problem bodies.

- Technical/contractual problems – it’s not always easy for legacy systems to expose data, and even where it is, the nature of public-sector IT procurement means that it’s going to cost. Ultimately we need to change how government does IT, but in the meantime we need to make sure the money comes from the vast savings to be made by removing the gunk. This means overcoming silos, which is no easy task.

- Identifier problems – being able to uniquely identify bodies, areas, categories, etc. Anyone who’s ever done any playing around with government data knows this is one of the central frustrations, and blockers, when combining data. Is this local authority/ward/police authority/company the same as that one? What do we mean by ‘primary school’ spending, and can we match it against this figure from central government? Some of these questions are hard to answer, but they are made much harder when organisations don’t use common, public identifiers.

Astonishingly the Audit Commission paper doesn’t really cover these issues (and doesn’t even mention the issue of identifiers, perhaps because it’s so bad at them). Is this because they haven’t really understood the issues, or is it because the paper is more about trying to make it seem relevant in a changing world? Either way, it’s got problems, and given the current attitude it doesn’t seem in a position to address them itself.

Introducing the Open Election Project: tiny steps towards opening local elections

Update: The Open Election Data project is now live at http://openelectiondata.org.

Here’s a fact that will surprise many: There’s no central or open record of the results of local elections in the UK.

Want to look back at how people voted in your local council elections over the past 10 years? Tough. Want to compare turnout between different areas, and different periods? Sorry, no can do. Want an easy way to see how close the election was last time, and how much your vote might make a difference? Forget it.

It surprised and faintly horrified me (perhaps I’m easily shocked). Go to the Electoral Commission’s website and you’ll see they quickly pass the buck… to the BBC, who just show records of seat numbers, not voting records.

In fact, there is an unofficial database of the election results — held by Plymouth University, and this is what they do (remember we’re in the year 2010 now):

“We collect them and then enter them manually into our database. This process is begun in February where we assess which local authority wards are due up for election, continues during March and April when we collect electorates and candidate names and then following the elections in May (or June in some years) we contact the local authorities for their official results”

Not surprisingly, the database is commercial (I guess they have to pay for all that manual work somehow), though they do receive some support from the Electoral Commission, which means as far as democracy, open analysis, and public record goes, it might as well not exist.

There are, of course, records of local election results on local authority websites, but accessible/comparable/reusable they ain’t, nor are they easy to find, and they are in so many different formats that it makes getting the information out of them near impossible, except manually.

So in the spirit of scratching your own itch (I’d like to put the information on OpenlyLocal.com, and I’m sure lots of other bodies would too, from the BBC to national press), I came up with a grandiose title and a simple plan: The Open Election Data project, an umbrella project to help local authorities to publish their election results in an open, reusable common format.

I had the idea at the end of the first meeting of the Local Public Data Panel, of which I’m a member and which is tasked with finding ways of opening up local public data. I then did an impromptu session at the UK Gov Barcamp on January 23, and got a great response. After that I had meetings and discussions with all sorts of people, from local govt types, local authority CMS suppliers, council webmasters, returning officers and standards organisations. Finally, it was discussed at the 2nd Local Public Data Panel meeting this week, and endorsed there.

So how does it work? Well, the basic idea is that instead of councils writing their election results web pages using some arbitrary HTML (or worse, using PDFs), they use HTML with machine-readable information added into it using something called RDFa, which is already used by many organisations for this purpose (e.g. for the government’s consultations).

This means that pretty much any competent Content Management System should be able to use the technique to expose the data, while still allowing them to style it as they wish. Here, for example, is what one of Lichfield District Council’s election results pages currently looks like:

And this is what it looks like after it’s had RDFa added (and a few more bits of information):

As you can see (apart from the extra info), there appears to be no change to the user. The difference, however, is that if you point a machine capable of understanding RDFa at it, you can slurp up the results, and bingo, suddenly you’ve got an election results database for free, putting local elections on a par with national ones for the first time.
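To illustrate what “slurping up the results” means, here is a deliberately tiny extractor that handles only a small subset of RDFa: `about` sets the subject, and `property` yields a value taken from `content` or, failing that, the element’s text. It is a sketch, not a conforming RDFa processor (prefixes are not expanded and well-formed markup is assumed), and the `ex:` property names in any example markup are hypothetical placeholders, not the Open Election Data vocabulary.

```python
from html.parser import HTMLParser

# HTML elements that never have a closing tag.
VOID_ELEMENTS = {"area", "base", "br", "col", "embed", "hr", "img",
                 "input", "link", "meta", "source", "track", "wbr"}


class TinyRDFaParser(HTMLParser):
    """A very small RDFa subset: @about sets the subject, @property emits a triple."""

    def __init__(self):
        super().__init__()
        self.subjects = [None]   # subject in scope for each open element
        self.open_props = []     # per open element: [subject, property, text parts] or None
        self.triples = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        subject = attrs.get("about", self.subjects[-1])
        prop = attrs.get("property")
        if prop and attrs.get("content") is not None:
            self.triples.append((subject, prop, attrs["content"]))
            prop = None  # value already taken from @content
        if tag in VOID_ELEMENTS:
            return
        self.subjects.append(subject)
        self.open_props.append([subject, prop, []] if prop else None)

    def handle_data(self, data):
        # Text counts towards every enclosing element that carries @property.
        for entry in self.open_props:
            if entry is not None:
                entry[2].append(data)

    def handle_endtag(self, tag):
        if tag in VOID_ELEMENTS or len(self.subjects) == 1:
            return
        self.subjects.pop()
        entry = self.open_props.pop()
        if entry is not None:
            subject, prop, parts = entry
            self.triples.append((subject, prop, " ".join("".join(parts).split())))


def extract_triples(html: str):
    """Return (subject, property, value) tuples found in the page."""
    parser = TinyRDFaParser()
    parser.feed(html)
    parser.close()
    return parser.triples
```

The visible page is unchanged by these attributes; they simply give a program like this something unambiguous to read, which is the whole point of the approach.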

So where do things go from here?

- There’s an overview of the project (this will eventually get published with the Local Public Data Panel minutes on the panel’s part of data.gov.uk);

- A draft demonstration markup with comments, based on Lichfield District Council’s election results (you can compare it with the original: just view source in your browser). Full specifications/instructions will be appearing in the next week or two, but in the meantime do have a look at the code and contact me with any questions.

- You can also see how an RDF-reader would see the information on the demonstration page as XML (RDF/XML to be precise) or as an alternative notation called turtle.

I’m also presenting this at the localgovcamp tomorrow (March 4), and we hope to have some draft local authority election results pages in the weeks shortly afterwards (although the focus is on getting as many councils as possible to implement this by the local elections on May 6, there’s nothing to stop them using it on existing pages, and indeed we’d encourage them to, so they can get a feel for it and expose those earlier results). I’m also discussing setting up a Community of Practice to help council webmasters discuss implementation.

Finally, many thanks to those who have helped me draw up the various RDFa stuff and/or helped with the underlying idea: especially Jeni Tennison, Paul Davidson from LeGSB, Stuart Harrison of Lichfield District Council, Tim Allen of the LGA, and many more.